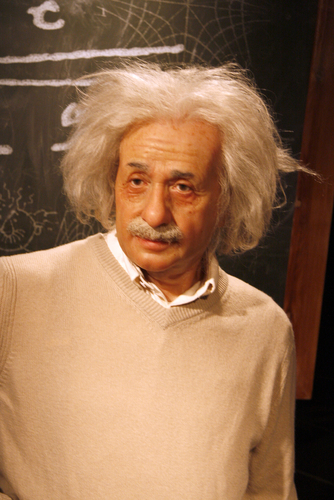

The phrase “it doesn’t take an Einstein” is an all-purpose IT department put-down that translates roughly as “it’s so obvious, you don’t need to be a genius to see the right decision to make.” Applied to selecting a cloud computing vendor, this principle suggests the answer is obvious: people want reliable performance at a fair market price, with no hidden charges.

Cloud performance has a direct impact on what you spend on compute resources, how you decide the right host for your workload, and how you choose to scale when the need arises. If a service provider offers “fastest performance” at a good price, that’s the right choice, isn’t it? You want the best performance for the money, right? It doesn’t take an Einstein to see that. Except, sometimes it does.

To paraphrase Einstein’s theory of relativity, one’s perception of reality depends on whether one is in motion. Two parties that are in motion will see each other differently depending on their relative speeds and directions. Cloud computing works in a comparable way. There is no “top performing” or “fastest” cloud infrastructure. There is only the infrastructure that will perform best for your specific workloads. It’s all relative. At least in this case, thinking like Einstein can help us make the right choice relative to our needs.

What are your cloud workloads? What performance requirements do they need to meet? Different applications and use cases put stress on different parts of the infrastructure. Some need more RAM. Others need more CPU capacity. Still others depend critically on input/output operations per second (IOPS). The network and storage are also significant factors in what we would consider overall application performance in the cloud, even if they have nothing to do with the actual hardware that’s doing the computing.

The best practice is to look at specific performance benchmarks for cloud infrastructure relative to your specific workloads. To help our clients accomplish this goal, CenturyLink Cloud recently used CloudHarmony, an objective third party, to measure the baseline performance of our infrastructure offerings. We wanted to understand how well we stacked up against other cloud players in performance areas that mean different things to different buyers.

One thing that CloudHarmony measured was our I/O profile (disk read/write speed) for large block sizes. Why does this matter? If you’re running a Microsoft SQL Server database, for example, you will likely be working with 64K blocks. You want to run that kind of workload with a cloud provider that will give you persistent storage and high performance for those large 64K blocks but not charge you for IOPS. This will result in predictable costs and fewer resources needed to achieve optimal performance.
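
To see why block size matters so much, it can help to run the arithmetic. The sketch below is illustrative only: the function name and the 200 MB/s target are assumptions made for the example, not CloudHarmony results or any provider’s pricing. It simply shows that the same throughput works out to a very different IOPS count depending on the block size in use.

```python
def iops_needed(throughput_mb_per_s: float, block_size_kb: int) -> float:
    """Return the IOPS required to sustain a throughput at a given block size.

    Hypothetical helper for illustration: throughput = IOPS * block size,
    with 1 MB taken as 1024 KB.
    """
    return throughput_mb_per_s * 1024 / block_size_kb


# Assumed target for the example: a database that must sustain 200 MB/s.
target_mb_per_s = 200

large_block_iops = iops_needed(target_mb_per_s, 64)  # 64K blocks
small_block_iops = iops_needed(target_mb_per_s, 4)   # 4K blocks, for contrast

print(f"64K blocks: {large_block_iops:.0f} IOPS")
print(f"4K blocks:  {small_block_iops:.0f} IOPS")
```

A benchmark quoted only in IOPS, or a bill metered per I/O operation, therefore tells you little until you know the block size your own workload actually uses.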

A similar issue arises with the paradox of choice in cloud servers. When you’re faced with dozens of server types to choose from, you find yourself selecting a “best fit” that may compromise in one area (“too much RAM!”) in order to get another (“need 8 CPUs”). In CenturyLink Cloud, we have two classes of servers (Standard and Hyperscale), and both have been shown to deliver reliable performance. Pick whatever amount of CPU or memory makes sense, which is how traditional servers have always been purchased. If built-in data redundancy doesn’t matter, but reliable, high performance does, you could select our Hyperscale server. If you need strong, consistent performance but want daily storage snapshots and a SAN backbone, you would be best off with our Standard servers. What matters is your specific requirement, not ours. It’s relative. Einstein would approve.

By Richard Seroter