With roughly two in three U.S. adults (64 percent) saying that fake news stories cause a great deal of confusion about current events, one cannot ignore the impact of fabricated news on today’s society. According to a study by Pew Research, 45 percent of Americans think the government, politicians and elected officials should deal with the spread of fake news, while 42 percent believe that social networking sites and search engines should take responsibility for fighting fabricated news. Whoever the public holds responsible for dealing with fake news, only tools using artificial intelligence (AI) can succeed in finding, filtering and eventually removing made-up news online. Although human intervention is required in many cases, AI is much more capable of recognizing a fake news story instantly and taking the required action.

The Fake News Challenge organization, for instance, is proposing an approach based on comparing how multiple news organizations cover a topic. They call this process Stance Detection: evaluating the perspective of two pieces of text relative to a topic, claim or issue. Comparing, with the assistance of AI, the stance of a body text in relation to a headline makes it possible to determine whether the body text agrees with, disagrees with, discusses or is unrelated to the headline. AI can then classify the body text as fake or factual news according to pre-defined rules and algorithms.
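To make the four stance labels concrete, here is a minimal sketch in Python. It is not the Fake News Challenge's own algorithm: the cue-word lists, the stopword list, the overlap threshold and the function names are all hypothetical, chosen only to illustrate how a headline and a body text might be compared. A real system would learn these signals from labeled data rather than hard-code them.

```python
import re

# Hypothetical cue words and stopwords, chosen for illustration only.
REFUTING = {"fake", "hoax", "false", "denies", "denied", "debunked", "not"}
HEDGING = {"reportedly", "allegedly", "claims", "claimed", "unconfirmed", "rumor"}
STOPWORDS = {"a", "an", "the", "of", "in", "on", "to", "is", "are", "was",
             "and", "that", "has"}


def tokenize(text):
    """Lowercase the text, split it into words and drop common stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def classify_stance(headline, body, overlap_threshold=0.3):
    """Return 'agree', 'disagree', 'discuss' or 'unrelated' for a headline/body pair.

    The threshold of 0.3 is an assumption, not a value taken from any published system.
    """
    head = set(tokenize(headline))
    body_tokens = set(tokenize(body))
    if not head:
        return "unrelated"
    # Share of headline words that also appear in the body.
    overlap = len(head & body_tokens) / len(head)
    if overlap < overlap_threshold:
        return "unrelated"
    if body_tokens & REFUTING:
        return "disagree"
    if body_tokens & HEDGING:
        return "discuss"
    return "agree"


headline = "Mayor opens new bridge downtown"
print(classify_stance(headline, "The mayor opened the new bridge downtown on Monday."))
print(classify_stance(headline, "Local bakery wins national pastry award."))
```

Simple word overlap is enough to separate related from unrelated text in this toy case, but telling agreement from disagreement is the hard part, which is why keyword rules like these are only a starting point.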

Other large organizations are developing such algorithms as well. A team of Google researchers published a paper in 2015 defining a new method for estimating the trustworthiness of a web source, i.e. a webpage or online document. Their technology enables search engines to understand a page’s context without relying on third-party signals such as links. Google’s algorithm assigns a trust score that distinguishes between made-up content and trustworthy content on the web.
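As a rough illustration of what a link-free trust score could look like, the sketch below scores a page by the share of its checkable claims that match a reference set of facts. This is an assumption-laden toy, not Google's published method: the `trust_score` function, the (subject, property, value) claim format and the sample data are all hypothetical.

```python
def trust_score(page_claims, reference_facts):
    """Fraction of a page's checkable claims that match the reference facts.

    page_claims: list of (subject, property, value) tuples extracted from a page.
    reference_facts: dict mapping (subject, property) to the accepted value.
    Returns None when no claim can be checked against the reference set.
    """
    checkable = [(s, p, v) for (s, p, v) in page_claims if (s, p) in reference_facts]
    if not checkable:
        return None
    correct = sum(1 for (s, p, v) in checkable if reference_facts[(s, p)] == v)
    return correct / len(checkable)


# Hypothetical reference facts and page claims, for illustration only.
reference = {
    ("France", "capital"): "Paris",
    ("Water", "boils_at_celsius"): "100",
}
claims = [
    ("France", "capital", "Paris"),       # matches the reference
    ("Water", "boils_at_celsius", "90"),  # contradicts the reference
    ("Mars", "color", "red"),             # not checkable, so ignored
]
print(trust_score(claims, reference))
```

Claims with no reference entry are left out of the score rather than counted as errors, so a page is not penalized for covering topics the reference set does not know about.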

At the governmental level, the European Union is investing in Pheme, a technology that aims to identify speculation, controversy, misinformation and disinformation on social media and across the online space as a whole. Pheme’s creators admit it is extremely difficult to assess whether a piece of information belongs to one of the four categories, especially in the context of social media. Nonetheless, machine learning and deep learning technologies can help Pheme progress toward a viable solution.

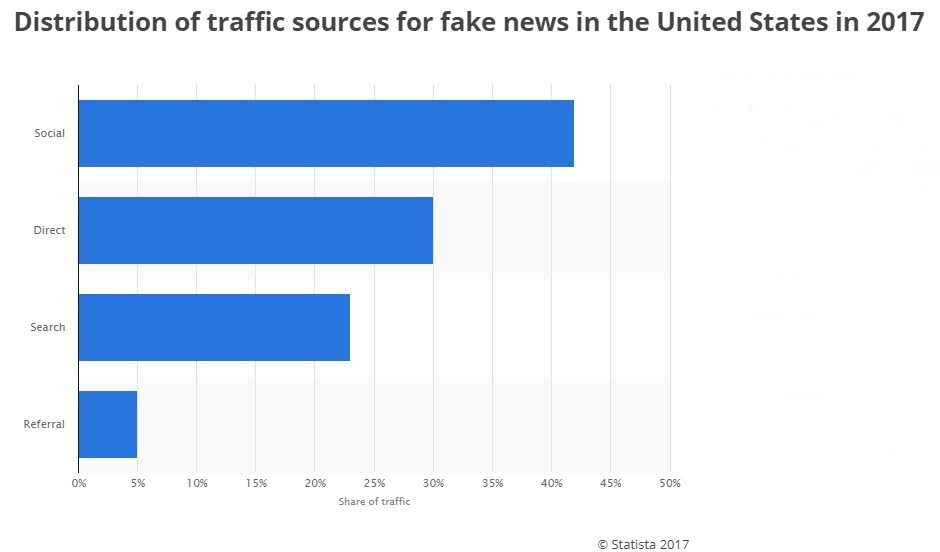

These are all critical technologies, since the fake news phenomenon is gaining traction, with websites small and large using made-up news or deceptive headlines to profit from traffic and gain attention. With the majority of online users looking to social media for news, the problem of fake news is becoming a real issue, especially when politics, the economy and human dignity are involved. Another issue with social media is that most people repost or share a news story after reading only its headline, which helps fake news spread to a vast audience.

AI systems, however, are already able to scan and analyze videos, images and audio, in addition to text, and apply machine-learning algorithms to detect made-up news stories. Moreover, the recent rise in the number of fake news stories online gives machine learning systems more examples to learn from, helping them recognize fabricated news so that AI can then remove those stories. Natural Language Processing (NLP) is another promising technology that AI can use to track how different media outlets cover particular news events and determine whether a site has fabricated a story on a certain topic.
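One simple way to picture this kind of cross-outlet comparison is to measure how similar each outlet's report is to everyone else's and surface the one with the least overlap. The sketch below does this with plain bag-of-words cosine similarity. The outlet names, sample texts and the `least_corroborated` helper are hypothetical, and a low score only marks a report for closer review; it does not prove the story is fabricated.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Count lowercase word occurrences in a text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def least_corroborated(reports):
    """Return (outlet, score) for the report least similar, on average, to the others.

    reports: dict mapping an outlet name to the text of its report.
    """
    vectors = {name: bag_of_words(text) for name, text in reports.items()}
    scores = {}
    for name, vec in vectors.items():
        others = [cosine(vec, v) for other, v in vectors.items() if other != name]
        scores[name] = sum(others) / len(others) if others else 0.0
    outlet = min(scores, key=scores.get)
    return outlet, scores[outlet]


# Hypothetical reports, for illustration only.
reports = {
    "outlet_a": "city council approves budget for new park",
    "outlet_b": "council approves new park budget in city vote",
    "outlet_c": "aliens seize city hall and cancel all taxes",
}
print(least_corroborated(reports)[0])
```

Word counts ignore meaning and word order, so a production system would more likely rely on learned text representations; the principle of comparing one report against the rest stays the same.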

Those AI-powered tools have yet to learn how to identify fake news in context, though. Even the simplest AI system can easily spot an incorrect fact, but only a few algorithms come close to identifying the context around the fact. Another limitation of current AI technologies is that they rely on existing online data, i.e. already published content, to assess the accuracy and reliability of a news story. If a relatively reputable source publishes a fact within a news story where not much context is provided, an algorithm will have real difficulty determining whether the story is fake or truthful.

Much effort is going into algorithms for fighting fake news, and some projects show remarkable progress in this field in spite of all obstacles. According to moderate estimates, we’ll have reliable AI tools for flagging and removing fake news within two to five years if a growing number of organizations and nation states invest a little more effort and funding in fighting fabricated stories. After all, fake news is a powerful weapon and AI is the only current technology that can stop the spread of made-up stories globally.

By Kiril V. Kirilov