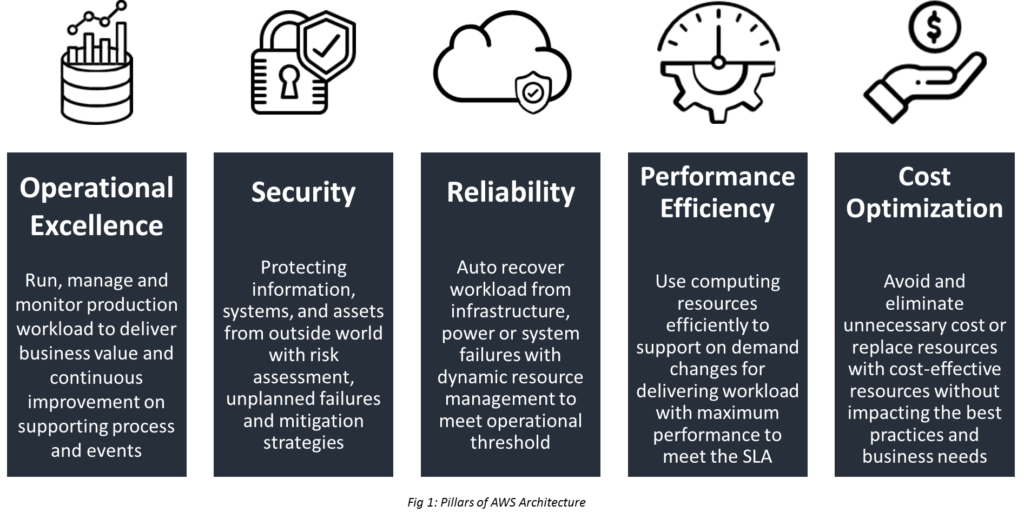

Cloud computing keeps growing every year, and with that growth come plenty of opportunities. Building a cloud solution calls for a strong architecture: if the foundation is not solid, the solution runs into problems with integrity and system workload. The five pillars of the AWS Well-Architected Framework help cloud architects create secure, high-performing, resilient, and efficient infrastructure.

In this post, we discuss the five pillars of the AWS Well-Architected Framework.

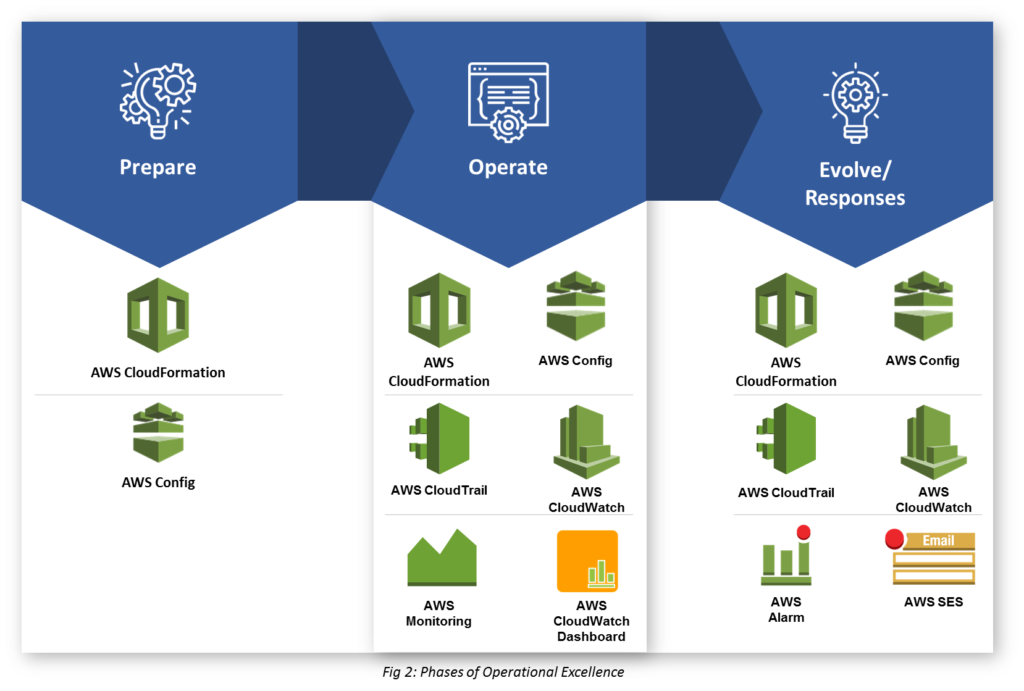

The Operational Excellence pillar combines processes, monitoring, and continuous improvement to deliver business value and to keep improving supporting processes and procedures.

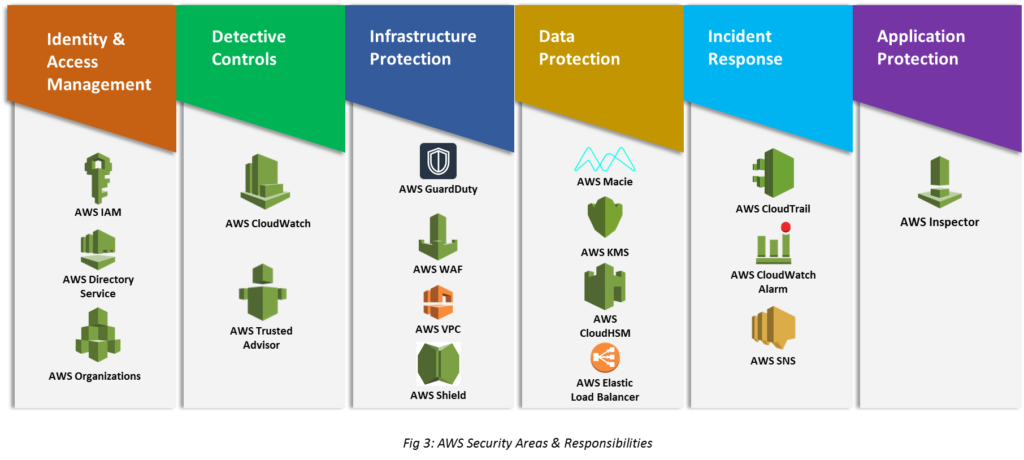

The Security pillar centers on protecting information, systems, and assets while still meeting business needs.

Implement a strong identity foundation

Apply the principle of least privilege and enforce authorized access to AWS resources. Centralize privilege management and reduce the risk that comes with long-term credentials.
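As an illustration of least privilege, the sketch below builds an IAM policy document that allows read-only access to objects under a single S3 prefix and nothing else. The bucket name `example-reports-bucket`, the prefix `monthly`, and the helper `read_only_prefix_policy` are hypothetical placeholders; only the policy layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the standard IAM JSON grammar.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy that allows only s3:GetObject under one prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            }
        ],
    }


# Hypothetical bucket and prefix, used only for illustration.
policy = read_only_prefix_policy("example-reports-bucket", "monthly")
print(json.dumps(policy, indent=2))
```

Anything not listed in the statement is denied by default, so the policy grants one action on one narrow resource instead of broad access to S3.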

Enable traceability and prepare for security events

Monitor, alert on, and audit actions and changes in the environment in real time. Run incident response simulations and use automation tools to speed up detection, investigation, and recovery.

Apply security at all layers

Apply security to all layers, e.g. network, database, OS, EC2, and applications. Protect applications and infrastructure from both human and machine attacks.

Automate security best practices

Create secure architectures using software-based security mechanisms, with controls that are defined and managed as code in version-controlled templates.

Safeguard data in transit and at rest

Classify data by sensitivity level and apply mechanisms such as encryption, tokenization, and access control.

Keep people away from data

Create mechanisms and tools that reduce or eliminate the need for direct access to data or manual processing of it, lowering the risk of loss due to human error.

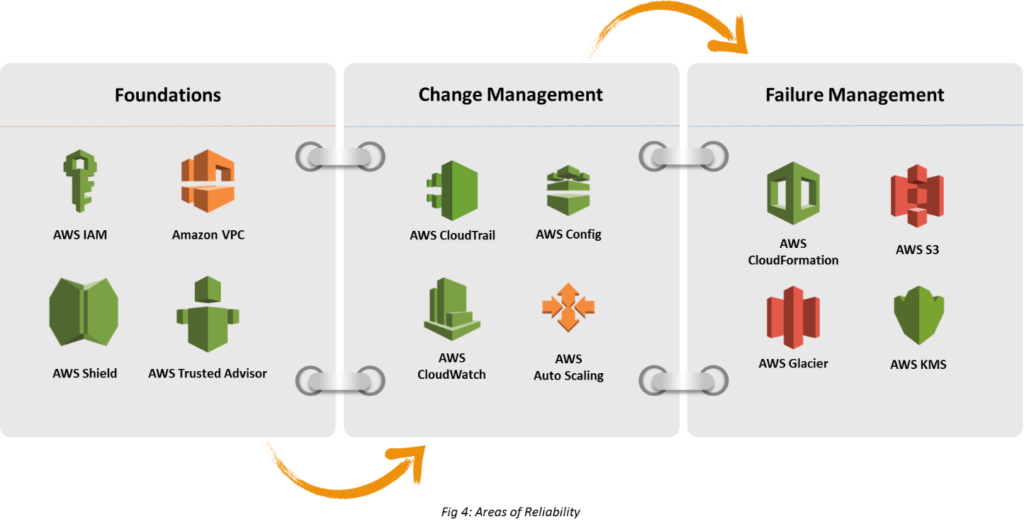

The Reliability pillar ensures that a system is architected to meet its operational thresholds over a specific period of time, handle increased workload demands, and recover from failures with minimal or no disruption.

Test recovery procedures

Use automation to simulate different failures or to recreate scenarios that led to failures in the past. This reduces the risk that a component fails in production without ever having been tested.

Automatic recovery from failure

Monitor the system against KPIs and trigger automation when a threshold is breached. This enables automatic notification and tracking of failures, as well as automated recovery processes that repair them.
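The idea of a KPI threshold that triggers both a notification and a repair step can be sketched in a few lines. This is a simplified, in-memory illustration and not an AWS API: the `Alarm` class, the `evaluate` function, the error-rate KPI, and the "instance restarted" action are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Alarm:
    """A KPI threshold paired with the recovery action it triggers."""
    name: str
    threshold: float
    recover: Callable[[], str]


def evaluate(alarm: Alarm, kpi_value: float, log: List[str]) -> bool:
    """Record an alert and run recovery when the KPI exceeds the threshold."""
    if kpi_value <= alarm.threshold:
        return False
    log.append(f"ALERT {alarm.name}: {kpi_value} > {alarm.threshold}")
    log.append(f"RECOVERY {alarm.name}: {alarm.recover()}")
    return True


# Hypothetical KPI: error rate in percent, with a restart as the repair step.
events: List[str] = []
error_rate_alarm = Alarm("error-rate", 5.0, lambda: "instance restarted")
triggered_low = evaluate(error_rate_alarm, 1.2, events)
triggered_high = evaluate(error_rate_alarm, 7.5, events)
print(events)
```

In a real deployment the log entries would go to a notification or ticketing system, and the recovery callable would invoke whatever automation repairs the failure.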

Scale horizontally to increase aggregate system availability

Replace one large resource with multiple small resources to reduce the impact of a single failure on the overall system.
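A quick calculation shows why this helps. Assuming equally sized nodes that share the load evenly (an assumption made for this sketch), the hypothetical helper below returns the fraction of capacity that remains when some nodes are down.

```python
def capacity_after_failures(node_count: int, failed: int = 1) -> float:
    """Fraction of total capacity left when `failed` equal-sized nodes are down."""
    if node_count <= 0 or not 0 <= failed <= node_count:
        raise ValueError("need node_count > 0 and 0 <= failed <= node_count")
    return (node_count - failed) / node_count


# Compare the impact of a single failure for different fleet sizes.
for nodes in (1, 2, 4, 8):
    print(nodes, capacity_after_failures(nodes))
```

With a single large resource, one failure removes all capacity; with eight small ones, the same failure leaves 87.5% of capacity in service.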

Stop guessing capacity

Monitor demand and system utilization and automate the addition or removal of resources to maintain the optimal level.
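One simple way to automate this is a proportional rule: scale the instance count by the ratio of observed utilization to target utilization, then clamp the result to configured limits. The sketch below is a simplified illustration of that idea, not the exact algorithm used by any AWS scaling service; the function name, the 60% target, and the limits are assumptions.

```python
import math


def desired_capacity(current: int, observed_util: float, target_util: float,
                     minimum: int = 1, maximum: int = 20) -> int:
    """Scale the instance count so utilization moves toward the target."""
    if target_util <= 0:
        raise ValueError("target_util must be positive")
    proposed = math.ceil(current * observed_util / target_util)
    # Never go below the floor or above the ceiling configured for the fleet.
    return max(minimum, min(maximum, proposed))


# Hypothetical fleet of 4 instances with a 60% CPU utilization target.
print(desired_capacity(4, 90.0, 60.0))  # overloaded: add instances
print(desired_capacity(4, 30.0, 60.0))  # underused: remove instances
```

Rounding up with `math.ceil` errs on the side of having slightly too much capacity rather than too little.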

Manage change in automation

Changes to infrastructure should be done via automation.

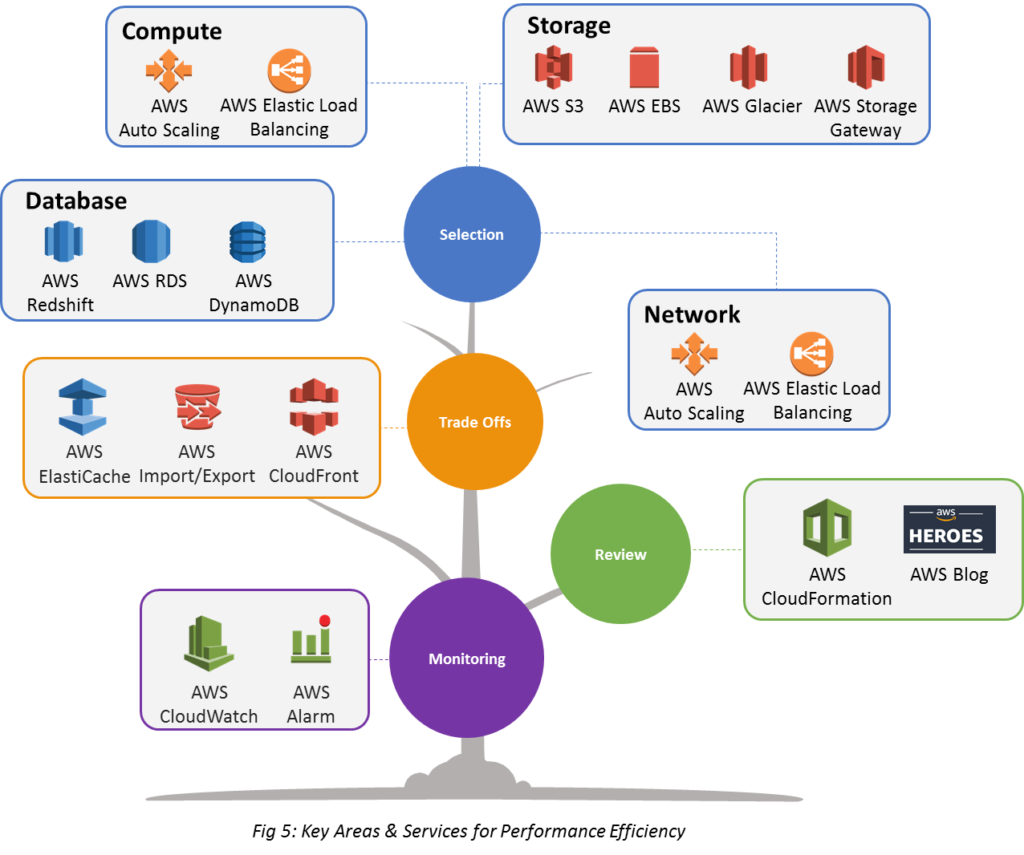

The Performance Efficiency pillar focuses on ensuring that a system or workload delivers maximum performance for the set of AWS resources it uses (instances, storage, databases, and locality).

Democratize advanced technologies

Use managed services (such as SQL/NoSQL databases, media transcoding, storage, and machine learning) to save time and avoid monitoring hassle, so the team can focus on product development rather than on resource provisioning and management.

Go global in minutes

Deploy the system in multiple AWS regions around the world to achieve lower latency and a better experience for customers at a minimal cost.

Use serverless architectures

Reduce the overhead of running and maintaining servers by letting AWS services host and monitor the infrastructure.

Experiment more often

With virtual, automated resources and deployments, it is easy to test a system and its infrastructure with different types of instances, storage, or configurations.

The Cost Optimization pillar focuses on achieving the lowest price for a system or workload. Optimize cost against the account's needs without neglecting factors like security, reliability, and performance.

Adopt a consumption model

Pay only for the computing resources you consume, and increase or decrease usage based on business requirements rather than on elaborate forecasting.

Measure overall efficiency

Measure the business output of the system and workload, and understand the gains achieved by increasing output and reducing cost.

Adopt managed services & stop spending money on data center operations

Managed services remove the operational burden of maintaining servers for tasks like sending email or managing databases, so the team can focus on customers and business projects rather than on IT infrastructure.

Analyze and attribute expenditure

Identify the usage and cost of each system, which allows transparent attribution of IT costs to revenue streams and individual business owners.
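Attribution usually comes down to grouping spend by an owner tag. The sketch below uses made-up line items and a hypothetical `cost_by_owner` helper to show the idea; in practice the input would come from tagged billing data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def cost_by_owner(line_items: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Sum cost per owner tag; untagged spend is grouped under 'untagged'."""
    totals: Dict[str, float] = defaultdict(float)
    for owner, cost in line_items:
        totals[owner or "untagged"] += cost
    return dict(totals)


# Made-up line items for illustration: (owner tag, monthly cost in dollars).
items = [("checkout", 120.0), ("search", 80.0), ("checkout", 30.0), ("", 15.0)]
report = cost_by_owner(items)
print(report)
```

Keeping an explicit "untagged" bucket makes unattributed spend visible, which is often the first thing to clean up.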

By using the AWS Well-Architected Framework and following the practices discussed above, you can design stable, reliable, and efficient cloud solutions that meet business needs and deliver value.

By Chandani Patel